Recently I noticed that whenever an issue about "traffic spikes" comes up over at "one of the forum support sites" and I post the well-known fact that, for most "well established" web sites, bot traffic accounts for over 50% of all traffic in the log files, my posts on that site get promptly deleted.

I have been puzzled as to why anyone at a certain "support site about forums" would delete posts which clearly state accurate and undisputed technical facts that are common knowledge among Internet system engineering and cybersecurity experts. Puzzled from a technical perspective, I checked out the billing model for "that forum hosting business." Experience over the years has shown all of us that when it comes to matters of money (and affairs of the heart), people will do strange and irrational things.

Why do my posts about bot traffic dominating cyberspace get repeatedly deleted?

Before I post an image of the business model I am referring to, let's get to some actual statistics; numbers which jibe with what I have seen over the last two decades "hands on platforms and logs".

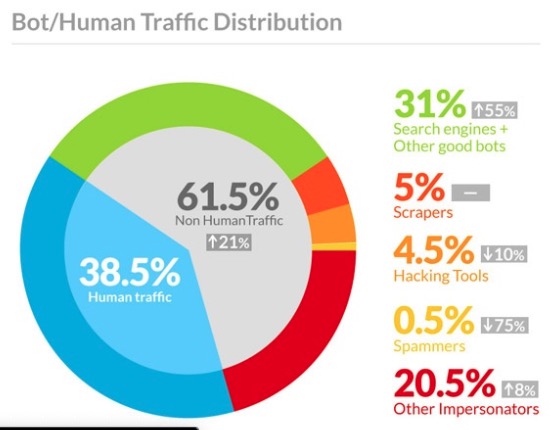

First, let's look at a 2013 online article by Igal Zeifman, "Report: Bot traffic is up to 61.5% of all website traffic". In that article, the bottom line is this eye-catching pie chart below, clearly showing that "non human" Internet traffic was over 60%, way back in 2013.

Then, in a 2017 article (just pulled at random from a Google search), "The Internet Is Mostly Bots: More than half of web traffic comes from automated programs, many of them malicious", Adrienne Lafrance says:

> Most website visitors aren’t humans, but are instead bots—or, programs built to do automated tasks. They are the worker bees of the internet, and also the henchmen. Some bots help refresh your Facebook feed or figure out how to rank Google search results; other bots impersonate humans and carry out devastating DDoS attacks.

We could enter into a loop and keep searching and posting references, but the results would lead to the same conclusion regardless of the number of hours we spent searching - more than half of all traffic on the Internet is generated by bots. It's a fact.

As someone who has been "hands on in the log files of web servers" for two decades, this comes as no surprise to me, or to anyone else who actually reviews the log files of their servers on the Internet. The web has been dominated by bots as far back as I can remember. All cybersecurity and data center professionals recognize this undisputed fact. I have written a lot of code over the years to "not show display ads" to bots. It's just part of doing business on the net.

So why do my innocent, information- and knowledge-sharing posts on this topic end up deleted, from time to time, by the management over at "that hosting company", and others I will not name?

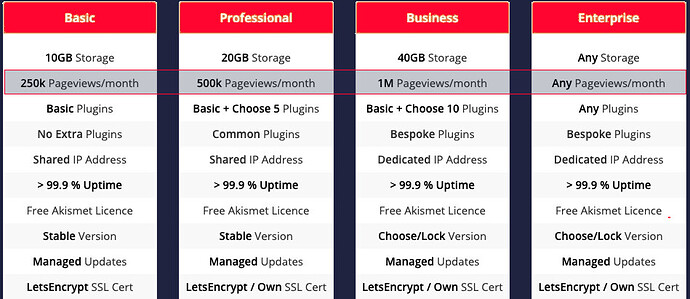

Maybe this picture explains it? Does it have anything to do with the fact that many business models are based on page views per month?

I actually thought (mistakenly) that the "page views per month" model had fallen by the wayside years ago. Maybe that is because most people I know will not pay under this kind of "per page view" pricing scheme unless the company has a very robust bot detection and classification system (and most do not) and bills only for actual human traffic.

Web-based publishers and advertisers are well aware of this "bot fact of life" as well. Almost all Internet advertising companies and publishers market their business as "having sophisticated algorithms to filter out bot traffic", and this way of marketing has been standard for some time. In fact, a few years ago I was on the phone with the CFO of one of the largest tech-related ad networks in the US, and he told me this key fact was at the heart of their customers' concerns and drove their entire business model.

I am surprised anyone would "vanish" my posts on this topic when discussing user-agent filtering, traffic spikes, rogue bots, and how to detect and block bots. It's a well-known fact, not something open to dispute or even controversy, except, it seems, for sites whose revenue model is driven by page views.

My suggestion, as an expert in this field long before these "hosting sites" were in business, is that these businesses must invest in software and hardware so they can do what everyone else in this business has been doing for many years: classify and track all traffic and, in a transparent way, assure that the page views their customers pay for are all human traffic; or at least provide them with very detailed statistics of the breakdown.

Not long ago, on one of the support sites I am referring to, one of their novice hosting customers was excited to see a huge spike in traffic and asked about the spike. They were in heaven seeing this spike, until I explained to them it was a bot. Then I was not welcome in the discussion! Imagine being the "wet blanket" at the party, explaining to a novice sys admin that their big traffic spike is NOT a huge surge of new human readers. It is no wonder I'm not popular at these sites :). The truth is not what people want to hear.

In my constant child-like innocence, I thought I was being helpful by explaining to the novice system admin that these spikes in traffic are very common and happen randomly on the net. We never know when a rogue bot will hit a site; but what we do know is that most random traffic spikes are caused by bots. This is just a fact of life in cyberspace. However, after posting "just the facts, ma'am", my post quickly vanished from cyberspace, forever.
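For readers who want to look at a spike themselves, here is a minimal sketch of one first check: counting which client addresses account for the requests in the spike window. The function name `top_clients` and the sample log lines are made up for illustration, and the code assumes the client IP is the first field of each line, as in the common and combined access log formats. A single address dominating the window is a strong hint of automation, not proof, and a bot spread across many addresses will not show up this way.

```python
from collections import Counter

def top_clients(log_lines, n=3):
    """Return the n busiest clients as (ip, hits, share_of_all_hits) tuples."""
    # Assumption: the client IP is the first whitespace-separated field,
    # as in the common and combined access log formats.
    hits = Counter(line.split()[0] for line in log_lines if line.strip())
    total = sum(hits.values())
    return [(ip, count, count / total) for ip, count in hits.most_common(n)]

# Made-up sample: ten requests during a "spike", eight from one address.
request = '- - [10/Oct/2020:13:55:36 +0000] "GET /forum HTTP/1.1" 200 512'
sample = [f"203.0.113.7 {request}"] * 8
sample += [f"198.51.100.2 {request}", f"192.0.2.9 {request}"]

for ip, count, share in top_clients(sample, n=1):
    print(f"{ip}: {count} hits, {share:.0%} of the spike")
# prints "203.0.113.7: 8 hits, 80% of the spike"
```

In practice you would feed this the log lines from the spike window only, for example the hour in which the graph jumps.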

How much web traffic is bot traffic?

The answer is that it varies.

On some days bot traffic can be less than half; on other (rare) days it might be 80% to 90% of the traffic totals. Most bots these days do not "identify themselves" as bots, and so simple logging and filtering on the identifying UA (user agent) strings is not a viable detection strategy.
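To make that limitation concrete, here is a small sketch of the naive UA approach. It assumes combined-format log lines, where the user agent is the last double-quoted field; the `BOT_MARKERS` list and the function name are hypothetical and far from complete. Whatever number it reports is only the share of bots that announce themselves, so it is a lower bound on real bot traffic: any bot sending a browser-like UA string is counted as human.

```python
import re

# Hypothetical marker list for illustration; real crawlers use many more tokens.
BOT_MARKERS = ("bot", "crawler", "spider")

# Assumption: combined log format, where the UA is the last quoted field.
UA_FIELD = re.compile(r'"([^"]*)"\s*$')

def self_identified_bot_share(log_lines):
    """Fraction of requests whose UA string openly contains a bot marker."""
    total = bots = 0
    for line in log_lines:
        match = UA_FIELD.search(line)
        if not match:
            continue  # skip lines that do not end with a quoted UA field
        total += 1
        ua = match.group(1).lower()
        if any(marker in ua for marker in BOT_MARKERS):
            bots += 1
    return bots / total if total else 0.0

# Made-up sample: one honest crawler, three requests with a browser UA.
# Nothing in the UA string tells us whether those three are really people.
prefix = '192.0.2.1 - - [10/Oct/2020:13:55:36 +0000] "GET / HTTP/1.1" 200 512 "-" '
crawler = '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
browser = '"Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/86.0 Safari/537.36"'
sample = [prefix + crawler] + [prefix + browser] * 3

print(f"{self_identified_bot_share(sample):.0%} self-identified bots")
# prints "25% self-identified bots"
```

If one of those three "browser" requests came from a scraper with a copied UA string, the true bot share would be 50%, and this kind of filter would never see it.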

What is a viable detection strategy for bots?

Stay tuned and I'll discuss that in a future article.