Hello,

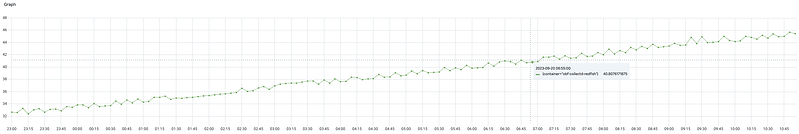

I am using the shell script below, which crontab runs every minute. Memory usage on the container keeps increasing until the container restarts, even though no other process is running on the system.
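One way to narrow this down is to check whether the growth is page cache or process memory (RSS). This is a sketch that assumes the container uses cgroup v1 (the CentOS 7 default) with the memory controller visible at `/sys/fs/cgroup/memory` inside the container. In cgroup v1 the container's memory usage includes page cache, and the script appends to log files every minute, so growth in cache alone could come from file writes rather than a leak. The function name `mem_breakdown` is hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: split a cgroup v1 memory.stat file into page cache vs. RSS.
# Assumes cgroup v1 with the memory controller mounted at /sys/fs/cgroup/memory.
mem_breakdown() {
    local stat_file="${1:-/sys/fs/cgroup/memory/memory.stat}"
    # memory.stat lines look like "cache 123456" and "rss 654321" (values in bytes);
    # "rss_huge" and other keys are ignored because the match is exact.
    awk '$1 == "cache" { c = $2 }
         $1 == "rss"   { r = $2 }
         END { printf "cache_mib=%d rss_mib=%d\n", c / 1048576, r / 1048576 }' "$stat_file"
}
```

For example, call `mem_breakdown` a few minutes apart and compare: if `cache_mib` climbs while `rss_mib` stays flat, the growth is probably cached log data rather than memory held by a process.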

Can somebody help me figure out what is going wrong here? Or is there a known issue with scheduling jobs from crontab on CentOS 7?
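On the crontab side, one thing worth checking (a sketch, not a confirmed cause) is whether `curl` or `ipmitool` processes pile up between runs or linger as zombies. In a container, orphaned processes are reparented to the container's PID 1, and if that process never reaps them they stay in the process table. The function name `count_procs` is hypothetical; it summarizes a listing in the format printed by `ps -eo stat=,comm=`:

```shell
#!/bin/bash
# Hypothetical helper: summarize a process listing to spot piled-up cron runs or zombies.
# Expects lines of "STAT COMMAND" on stdin, e.g. from: ps -eo stat=,comm=
count_procs() {
    awk '$2 == "curl"     { curl++ }
         $2 == "ipmitool" { ipmi++ }
         $1 ~ /^Z/        { zombie++ }   # STAT starting with Z marks a zombie
         END { printf "curl=%d ipmitool=%d zombie=%d\n", curl, ipmi, zombie }'
}
```

For example, `ps -eo stat=,comm= | count_procs` run once a minute: counts that keep rising would suggest overlapping runs or unreaped child processes.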

```bash
#!/bin/bash

process_api_output() {
    local redfish_url="$1"
    local log_dir="${2:-redfish}"
    local log_time
    log_time=$(date +%d%b%y_%H)
    local log_dir_path="/log/$log_dir"
    local log_file="${log_dir_path}/redfish#uhn7.local#-${log_time}.log"
    local error_log_file="${log_dir_path}/redfish_error#uhn7.local#-${log_time}.log"
    local tmp_log_file="${log_dir_path}/redfish_tmp.log"

    mkdir -p "$log_dir_path"
    chmod -R 777 "$log_dir_path"

    # Capture the response body in a variable; curl's verbose/error output (stderr) goes to the temp log
    local API_OUTPUT
    API_OUTPUT=$(curl --connect-timeout 20 --max-time 40 -g -vsS -k -u test:test \
        -H 'Content-type:application/json' "$redfish_url" 2>> "$tmp_log_file")

    # Remove newline, carriage return, and form feed characters from the temp log and append it to the error log
    # (plain redirection instead of `tee -a`, so this step writes nothing to the job's stdout)
    tr -d '\n\r\f' < "$tmp_log_file" >> "$error_log_file"

    # Remove the temporary log file
    rm -f "$tmp_log_file"

    # Strip the outer braces of the JSON response and append it to the main log with a timestamp.
    # Skipped when curl returned fewer than 2 characters, where ${API_OUTPUT:1:-1} would fail.
    if [ "${#API_OUTPUT}" -ge 2 ]; then
        local API_OUTPUT_SUBSTR="${API_OUTPUT:1:-1}"
        echo "{$API_OUTPUT_SUBSTR,\"Logtime\":\"$(date)\"}" >> "$log_file"
        echo -e "\n" >> "$log_file"
    fi
    echo -e "\n" >> "$error_log_file"
}

# Usage example:
process_api_output "https://[<ip>]/redfish/v1/Managers/Self/OemSensors"
process_api_output "https://[<ip>]/redfish/v1/Managers/Self/LogServices/SEL"
process_api_output "https://[<ip>]/redfish/v1/Managers/Self/LogServices/SEL/Entries"

fpga_log="/log/redfish/fpga#uhn7.local#-$(date +%d%b%y_%H)_test.log"

# Write FPGA sensors as one JSON object ($1 = sensor name, $3 = reading; assumes names contain no spaces)
timeout 30 ipmitool sdr list | grep -i FPGA | awk '
    BEGIN { ORS = ""; print "{ " }
    { printf "%s\"%s\" : \"%s\"", separator, $1, $3; separator = ", " }
    END { print " } \n" }' >> "$fpga_log"

# Collapse runs of whitespace in its arguments (relies on word splitting)
trim() {
    echo $*
}

OUTPUT=$(timeout 30 ipmitool -U admin -P 'xxxxx' raw 0x36 0x66 0x4c 0x1C 0x00 0x01)
DATE=$(date)
printf '{"FAULT_LED":"%s","Logtime":"%s"}\n' "$(trim $OUTPUT)" "$DATE" >> "$fpga_log"
```